Enhanced Usage Analysis with Power BI - Part One

Introduction

Power BI is a very popular business tool and in many organisations we see a rapid and exponential growth in its adoption and use. Growth and adoption is a great thing but without some degree of guidance & structure things can get out of control. So how do we achieve a bit of control and governance over Power BI? Well in my opinion one of the first pre-requisites to control is "visability". If you can quickly and easily see and analyse what is happening in your enterprise Power BI environment then you can take action to encourage the good things that are happening and discourage the bad. Some of the questions that would be very useful to answer are:

- Who is creating content?

- Who is viewing content?

- What is the most popular content?

- How is content being shared?

- What are the trends associated with all of the above?

Straight "out of the box" the Power BI service provides quite a lot of useful information relating to this. See the two links below for a quick review of these features:

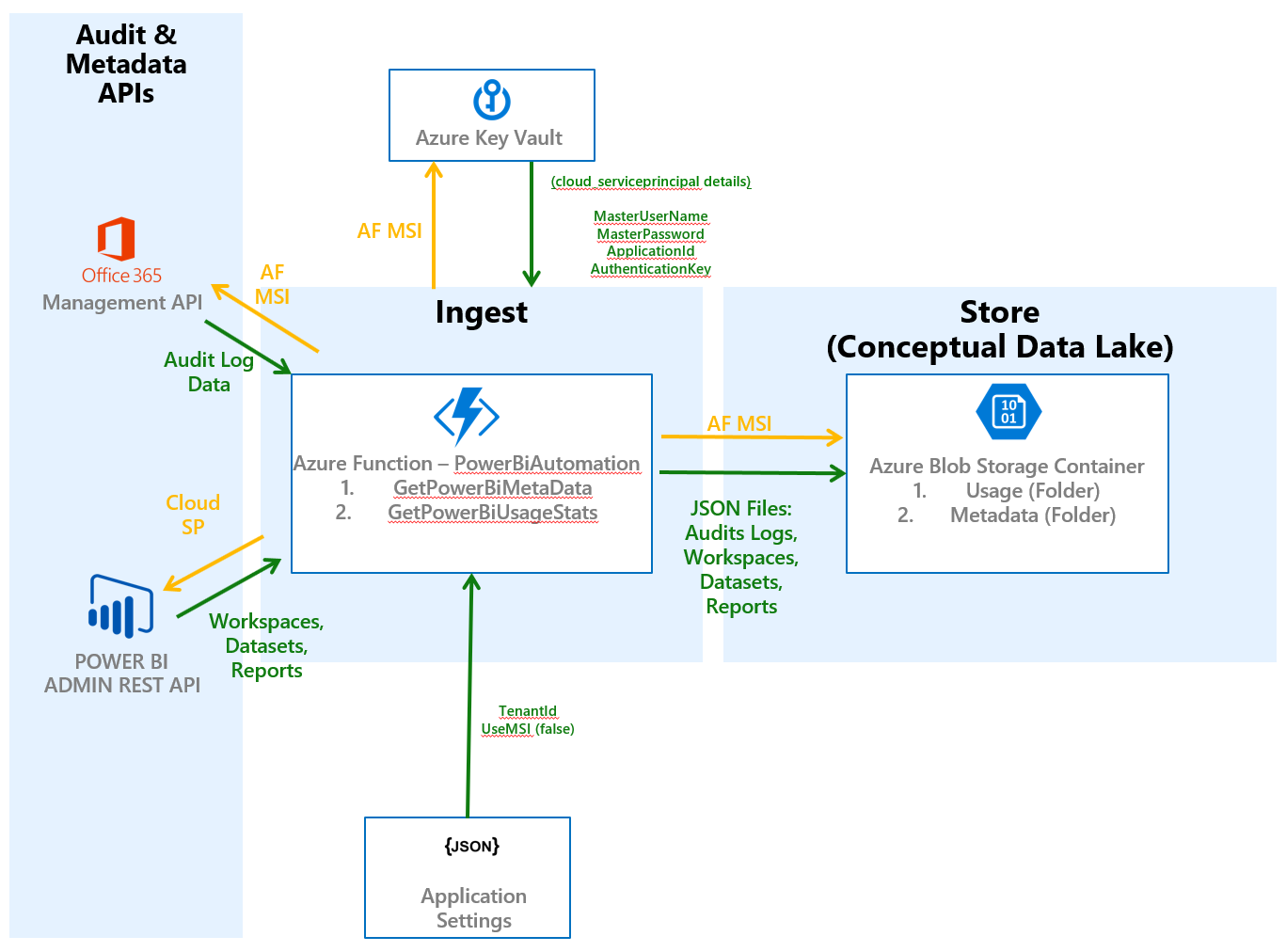

But what if you want to go beyond these standard features? What if you want to extended on these features to provide a comprehensive view of what is happening and integrate this into your planning and governance processes? Well, if that is you then you are in luck because that is exactly what this series of posts will be about. Over the course of 3 or 4 articles I intend to guide you through the process of implementing a full usage tracking and analysis solution for Power BI. The goal of the series will be to build out the architecture presented in the image below.

Information will be extracted from both the O365 Management API and the Power BI Admin API by a set of Azure Functions. This information will be loaded in a semi- structured format (JSON) into an Azure Blob Storage container. From there a subset of the information will be loaded into an Azure SQL Database. A rigid structure will be applied to the data as well as a series of business rules and validation procedures. A Power BI OLAP cube will then be built on top of this as well as a series of rich Power BI reports. I'll provide you with all of the template code to do this as well as step by step instructions. Once you have deployed the solution you are free to then extend it so that it meets the unique needs of your organisation.

As an added bonus it useful to note that the architectural pattern applied here can be re-used to do other very useful things. Its a fairly typical and popular pattern that you can use for a wide variety of reporting and analytical applications.

You'll be pleased to know that I won't tackle this all at once. This first article will deal with the first phase of the architecture only. I've highlighted what will be covered using the red over-lay in the image below. So this article will look at implementing the Azure Functions that will access the APIs, extract the relevant data and upload that data into a Blob storage container.

Information will be extracted from both the O365 Management API and the Power BI Admin API by a set of Azure Functions. This information will be loaded in a semi- structured format (JSON) into an Azure Blob Storage container. From there a subset of the information will be loaded into an Azure SQL Database. A rigid structure will be applied to the data as well as a series of business rules and validation procedures. A Power BI OLAP cube will then be built on top of this as well as a series of rich Power BI reports. I'll provide you with all of the template code to do this as well as step by step instructions. Once you have deployed the solution you are free to then extend it so that it meets the unique needs of your organisation.

As an added bonus it useful to note that the architectural pattern applied here can be re-used to do other very useful things. Its a fairly typical and popular pattern that you can use for a wide variety of reporting and analytical applications.

You'll be pleased to know that I won't tackle this all at once. This first article will deal with the first phase of the architecture only. I've highlighted what will be covered using the red over-lay in the image below. So this article will look at implementing the Azure Functions that will access the APIs, extract the relevant data and upload that data into a Blob storage container.

Power BI is a very popular business tool and in many organisations we see a rapid and exponential growth in its adoption and use. Growth and adoption is a great thing but without some degree of guidance & structure things can get out of control. So how do we achieve a bit of control and governance over Power BI? Well in my opinion one of the first pre-requisites to control is "visability". If you can quickly and easily see and analyse what is happening in your enterprise Power BI environment then you can take action to encourage the good things that are happening and discourage the bad. Some of the questions that would be very useful to answer are:

- Who is creating content?

- Who is viewing content?

- What is the most popular content?

- How is content being shared?

- What are the trends associated with all of the above?

Straight "out of the box" the Power BI service provides quite a lot of useful information relating to this. See the two links below for a quick review of these features:

But what if you want to go beyond these standard features? What if you want to extended on these features to provide a comprehensive view of what is happening and integrate this into your planning and governance processes? Well, if that is you then you are in luck because that is exactly what this series of posts will be about. Over the course of 3 or 4 articles I intend to guide you through the process of implementing a full usage tracking and analysis solution for Power BI. The goal of the series will be to build out the architecture presented in the image below.

Information will be extracted from both the O365 Management API and the Power BI Admin API by a set of Azure Functions. This information will be loaded in a semi- structured format (JSON) into an Azure Blob Storage container. From there a subset of the information will be loaded into an Azure SQL Database. A rigid structure will be applied to the data as well as a series of business rules and validation procedures. A Power BI OLAP cube will then be built on top of this as well as a series of rich Power BI reports. I'll provide you with all of the template code to do this as well as step by step instructions. Once you have deployed the solution you are free to then extend it so that it meets the unique needs of your organisation.

As an added bonus it useful to note that the architectural pattern applied here can be re-used to do other very useful things. Its a fairly typical and popular pattern that you can use for a wide variety of reporting and analytical applications.

You'll be pleased to know that I won't tackle this all at once. This first article will deal with the first phase of the architecture only. I've highlighted what will be covered using the red over-lay in the image below. So this article will look at implementing the Azure Functions that will access the APIs, extract the relevant data and upload that data into a Blob storage container.

A quick note on authentication, configuration and deployment

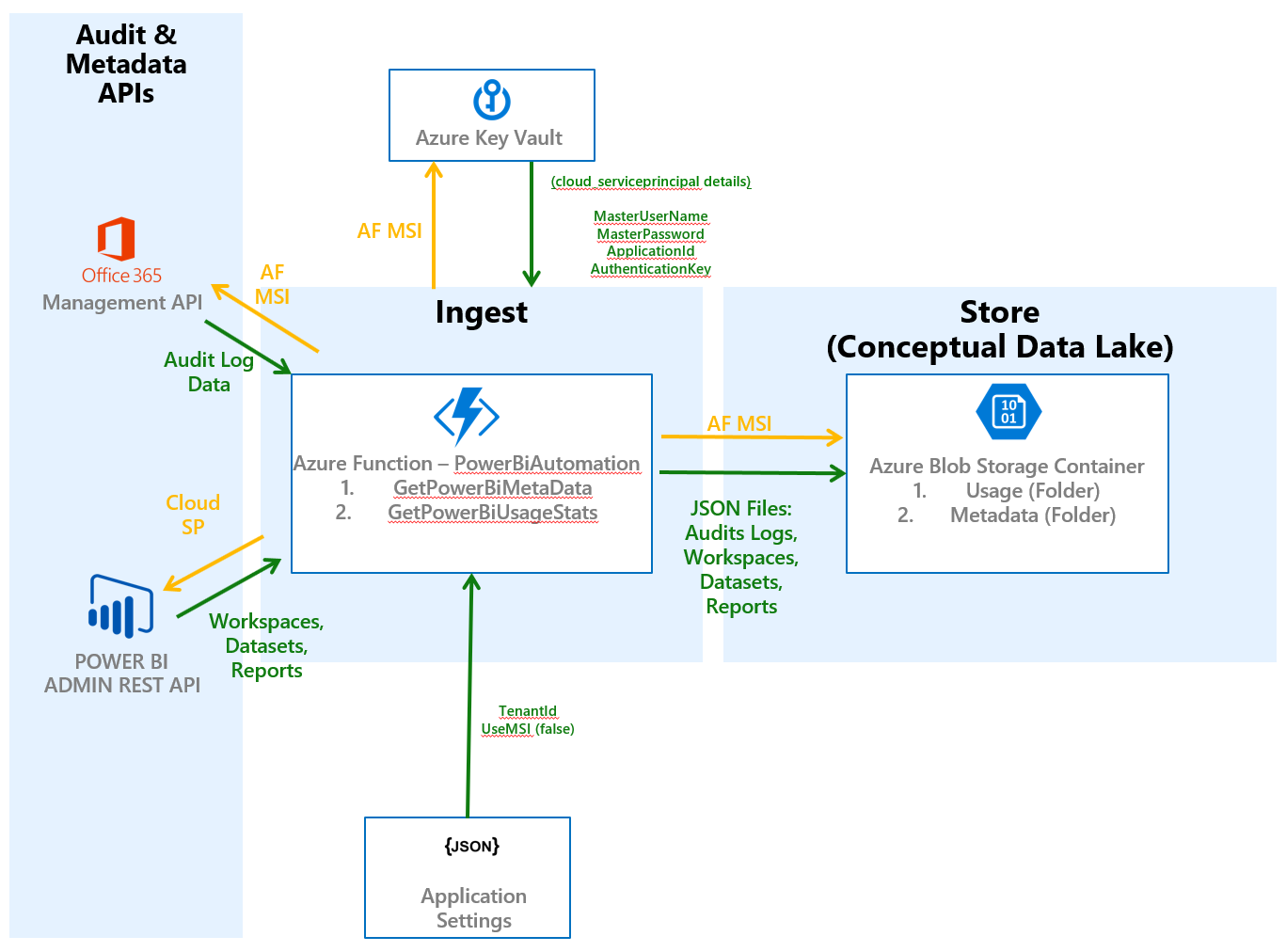

Azure functions can be executed locally using an IDE like "VS Code" or remotely within the Azure service. For local development and debugging it is essential that you understand how to configure local execution.

When running the functions in VS code you will authenticate with cloud services using two Azure Service Principal Accounts. The "localdev_serviceprincipal" will be used to access AzureKeyVault, The Office Management API and Azure Blob storage. The details required to authenticate the localdev_serviceprincipal will be stored within a local.settings.json that is stored on your local computer.

The "cloud_serviceprincipal" will be used to access the Power BI REST API. Note that the cloud_serviceprincipal will do this by using permissions "delegated" from Azure Active Directory user account which we will refer to as the Power BI Master User. The details required to authenticate the cloud_serviceprincipal will be stored within and accessed from an Azure Key Vault. See the image below for a visual representation of the data and authentication flows.

Data and Authentication Flows - Local Debugging

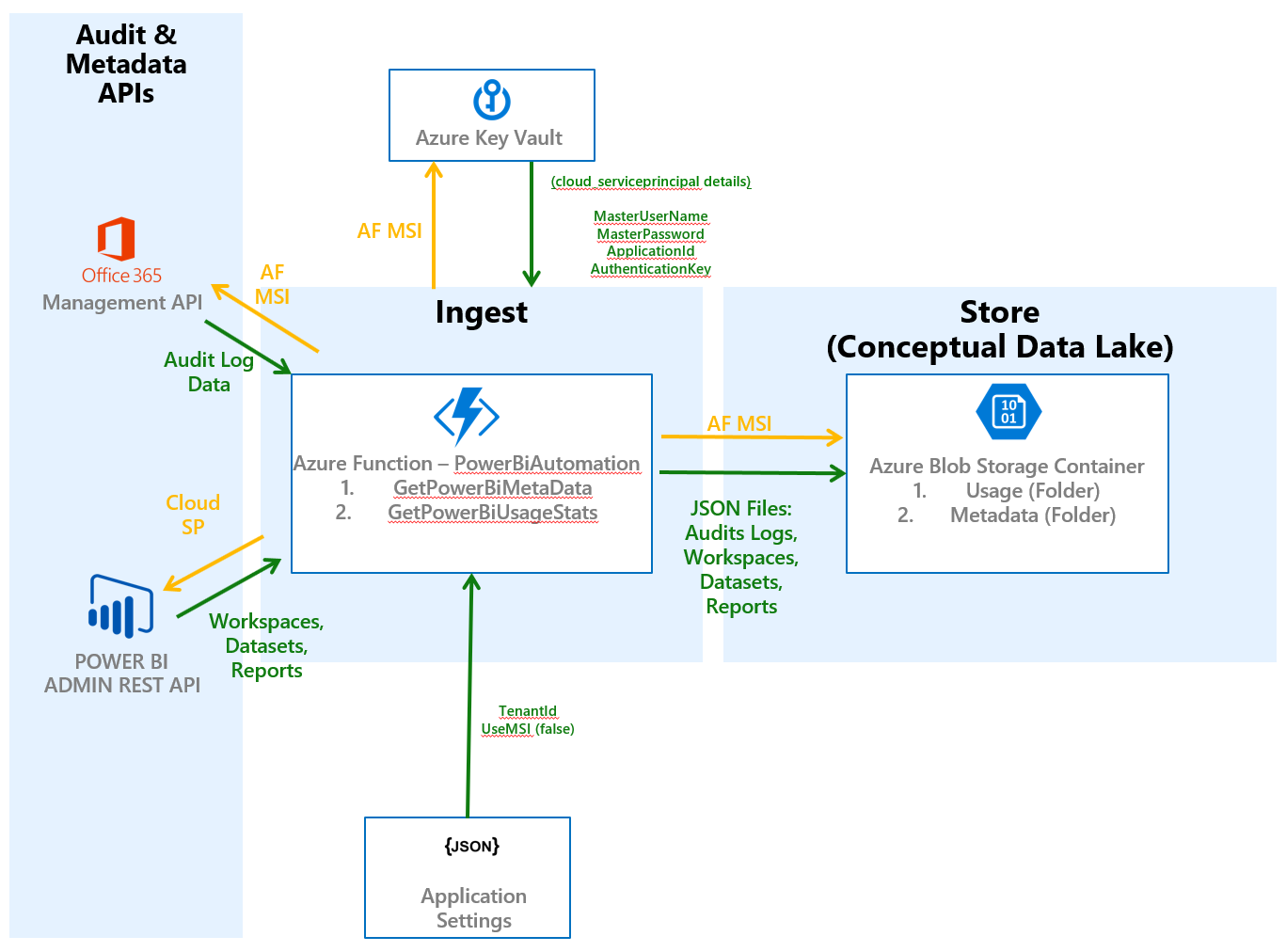

Once deployed to the cloud the localdev_serviceprincipal is no longer used. Instead the managed service identity associated with the Azure Functions instance is now used to access the Office 365 Management API, Azure Key Vault and Azure Blob storage. Note that the cloud_serviceprincipal is still used to provide access to the Power BI Admin API's. Also note that the authentication behaviour exhibited by the application is controlled by the UseMSI setting. For cloud deployment this is found in the Azure Function's application settings. For local development this is found in "local.settings.json". When UseMSI is "True" then the application will use the cloud based settings when set to "False" it will use the local development settings. Therefore, this value should be "True" in the Azure Function's application settings and false in "local.settings.json" on your local machine. The figure below depicts the data and authentication flows when the function is cloud deployed.

Data and Authentication Flows - Cloud Deployment

Once deployed to the cloud the localdev_serviceprincipal is no longer used. Instead the managed service identity associated with the Azure Functions instance is now used to access the Office 365 Management API, Azure Key Vault and Azure Blob storage. Note that the cloud_serviceprincipal is still used to provide access to the Power BI Admin API's. Also note that the authentication behaviour exhibited by the application is controlled by the UseMSI setting. For cloud deployment this is found in the Azure Function's application settings. For local development this is found in "local.settings.json". When UseMSI is "True" then the application will use the cloud based settings when set to "False" it will use the local development settings. Therefore, this value should be "True" in the Azure Function's application settings and false in "local.settings.json" on your local machine. The figure below depicts the data and authentication flows when the function is cloud deployed.

Data and Authentication Flows - Cloud Deployment

Azure functions can be executed locally using an IDE like "VS Code" or remotely within the Azure service. For local development and debugging it is essential that you understand how to configure local execution.

When running the functions in VS code you will authenticate with cloud services using two Azure Service Principal Accounts. The "localdev_serviceprincipal" will be used to access AzureKeyVault, The Office Management API and Azure Blob storage. The details required to authenticate the localdev_serviceprincipal will be stored within a local.settings.json that is stored on your local computer.

The "cloud_serviceprincipal" will be used to access the Power BI REST API. Note that the cloud_serviceprincipal will do this by using permissions "delegated" from Azure Active Directory user account which we will refer to as the Power BI Master User. The details required to authenticate the cloud_serviceprincipal will be stored within and accessed from an Azure Key Vault. See the image below for a visual representation of the data and authentication flows.

Data and Authentication Flows - Local Debugging

Data and Authentication Flows - Local Debugging

Once deployed to the cloud the localdev_serviceprincipal is no longer used. Instead the managed service identity associated with the Azure Functions instance is now used to access the Office 365 Management API, Azure Key Vault and Azure Blob storage. Note that the cloud_serviceprincipal is still used to provide access to the Power BI Admin API's. Also note that the authentication behaviour exhibited by the application is controlled by the UseMSI setting. For cloud deployment this is found in the Azure Function's application settings. For local development this is found in "local.settings.json". When UseMSI is "True" then the application will use the cloud based settings when set to "False" it will use the local development settings. Therefore, this value should be "True" in the Azure Function's application settings and false in "local.settings.json" on your local machine. The figure below depicts the data and authentication flows when the function is cloud deployed.

Data and Authentication Flows - Cloud Deployment

Repository Structure

The source control repository contains a number of sub-folders. For the purposes of this article you only need to look at the "FunctionApp" sub-folder. This folder contains a set of Azure Functions that will gather Power BI Meta data and usage data. The current function list includes:

-

GetPowerBiMetaData - This function provides the functionality to fetch meta data relating to Power BI Workspaces, Groups, Datasets and Reports. It will also write the collected data into an Azure Blob storage container. See the comments within "GetPowerBIMetaData.cs" to get an understanding of the various methods and parameters.

-

GetPowerBiUsageStats - This function will get the O365 audit data from the O365 Api and write it to an Azure Blob storage container. See the comments at the top of "GetPowerBIMetaData.cs" to get an understanding of the various methods and parameters.

Note: There is also a Shared.cs file that contains functionality that is used by both GetPowerBiMetaData & GetPowerBiUsageStats.

The source control repository contains a number of sub-folders. For the purposes of this article you only need to look at the "FunctionApp" sub-folder. This folder contains a set of Azure Functions that will gather Power BI Meta data and usage data. The current function list includes:

- GetPowerBiMetaData - This function provides the functionality to fetch meta data relating to Power BI Workspaces, Groups, Datasets and Reports. It will also write the collected data into an Azure Blob storage container. See the comments within "GetPowerBIMetaData.cs" to get an understanding of the various methods and parameters.

- GetPowerBiUsageStats - This function will get the O365 audit data from the O365 Api and write it to an Azure Blob storage container. See the comments at the top of "GetPowerBIMetaData.cs" to get an understanding of the various methods and parameters.

Note: There is also a Shared.cs file that contains functionality that is used by both GetPowerBiMetaData & GetPowerBiUsageStats.

Detailed Instructions for Development and Deployment

Step One: Create Pre-requisite Azure Services

A. Function App

Create a Function App in Azure and provision a System Assigned Managed Service Identity. I have not provided a script to do this but you can view the documentation at the link below:

Create a Function App in Azure and provision a System Assigned Managed Service Identity. I have not provided a script to do this but you can view the documentation at the link below:

B. Storage Account

Create a storage account and related blob container that will be used to provide an initial storage area for the Power BI meta data and audit log information.

Create a storage account and related blob container that will be used to provide an initial storage area for the Power BI meta data and audit log information.

C. Create Power BI Master User

Create a Power BI "Master User Account" that does NOT have MFA enabled. This user should be a Power BI Admin @ the 0365 tenant level. For additional security you can add an Azure Function to automatically rotate this account's password on a regular basis.

Create a Power BI "Master User Account" that does NOT have MFA enabled. This user should be a Power BI Admin @ the 0365 tenant level. For additional security you can add an Azure Function to automatically rotate this account's password on a regular basis.

D. Key Vault:

Create an Azure Key Vault and create the following secrets:

SecretKey SecretValue ApplicationId Empty String AuthenticationKey Empty String MasterUserName {Power BI Master UserName From C. above} MasterUserPassword {Power BI Master UserPassword From C. above}

Note!! Replace items in curly brackets with your own values.

Create an Azure Key Vault and create the following secrets:

| SecretKey | SecretValue |

|---|---|

| ApplicationId | Empty String |

| AuthenticationKey | Empty String |

| MasterUserName | {Power BI Master UserName From C. above} |

| MasterUserPassword | {Power BI Master UserPassword From C. above} |

Note!! Replace items in curly brackets with your own values.

Step Two: Connect to Azure using an Administrator account.

I am going to guide you through many of the remaining steps with the help of some Powershell scripts. In order to execute these scripts you will first need to login to Azure. To do this launch Powershell ISE and copy the following code block into the script. Execute the script and follow the login prompts (there will be two sets of login prompts). Please note that I am suggesting that you write this in Powershell ISE but you can use whatever Powershell execution environment that you prefer.

#Connect to Azure with Appropriate Credential

Connect-AzureAD

Connect-AzureRmAccount

I am going to guide you through many of the remaining steps with the help of some Powershell scripts. In order to execute these scripts you will first need to login to Azure. To do this launch Powershell ISE and copy the following code block into the script. Execute the script and follow the login prompts (there will be two sets of login prompts). Please note that I am suggesting that you write this in Powershell ISE but you can use whatever Powershell execution environment that you prefer.

#Connect to Azure with Appropriate Credential

Connect-AzureAD

Connect-AzureRmAccount

Step Three: Set Deployment Environment.

This step is simply going to set the desired target environment (eg. Production or Development). Replace the script contents in Powershell ISE with the following commands. Edit based on the environment that you would like to deploy to and execute.

#Determine if deployment is for for Development or Production (Uncomment second line for production deployment)

$Environment = "Development"

#$Environment = "Production"

This step is simply going to set the desired target environment (eg. Production or Development). Replace the script contents in Powershell ISE with the following commands. Edit based on the environment that you would like to deploy to and execute.

#Determine if deployment is for for Development or Production (Uncomment second line for production deployment)

$Environment = "Development"

#$Environment = "Production"

Step Four: Create the required Service Principals.

If you would like the required Service Principals to be created via Powershell script then replace the script contents in Powershell ISE with the following commands. Feel free to rename the service principals by updating the -DisplayName paramters accordingly.

#####Create Service Principals###

$localdev_serviceprincipal = New-AzureRmADServicePrincipal -DisplayName "localdev_serviceprincipal"

$cloud_serviceprincipal = New-AzureRmADServicePrincipal -DisplayName "cloud_serviceprincipal"

$localdev_serviceprincipal_objectid = $localdev_serviceprincipal.Id

$cloud_serviceprincipal_objectid = $cloud_serviceprincipal.Id

$localdev_serviceprincipal_objectid = "{localdev_serviceprincipal_objectid}"

$cloud_serviceprincipal_objectid = "{cloud_serviceprincipal_objectid}"

SecretKey SecretValue ApplicationId {cloud_serviceprincipal_objectid} AuthenticationKey {cloud_serviceprincipal_secret}

If you would like the required Service Principals to be created via Powershell script then replace the script contents in Powershell ISE with the following commands. Feel free to rename the service principals by updating the -DisplayName paramters accordingly.

#####Create Service Principals###

$localdev_serviceprincipal = New-AzureRmADServicePrincipal -DisplayName "localdev_serviceprincipal"

$cloud_serviceprincipal = New-AzureRmADServicePrincipal -DisplayName "cloud_serviceprincipal"

$localdev_serviceprincipal_objectid = $localdev_serviceprincipal.Id

$cloud_serviceprincipal_objectid = $cloud_serviceprincipal.Id

Alternatively, if you would like to create these yourself via another method then simply run the script block below to set the related variables with the appropriate values.

Note!! Replace items in curly brakets with your own values (Do not keep the curly brackets).

$localdev_serviceprincipal_objectid = "{localdev_serviceprincipal_objectid}"

$cloud_serviceprincipal_objectid = "{cloud_serviceprincipal_objectid}"

Now that you have the required Service Principal information go back to your key vault and add in the values for the "cloud_serviceprincipal". To get the AuthenticationKey you will need to generate a value via the portal. Please see this link for details: https://docs.microsoft.com/en-us/azure/active-directory/develop/howto-create-service-principal-portal#get-application-id-and-authentication-key

Note!! Replace items in curly brackets with your own values (Do not keep the curly brackets).

| SecretKey | SecretValue |

|---|---|

| ApplicationId | {cloud_serviceprincipal_objectid} |

| AuthenticationKey | {cloud_serviceprincipal_secret} |

Step Five: Set other required variables

Sample Storage Account Scope: "/subscriptions/{Your-subscription-id}/resourceGroups/{Your-Resource-Group}/providers/Microsoft.Storage/storageAccounts/{Your-StorageAccount-Name}/blobServices/default/containers/{Your-Container-Name}"

Note!! Replace items in curly brackets with your own values (Do not keep the curly brackets).

$msi_objectid = "{Your Azure Function App's MSI objectid}"

$storageaccountscope = "{Scope of your storage account}"

$keyvaultname = "{Your Key Vault Name}"

Sample Storage Account Scope: "/subscriptions/{Your-subscription-id}/resourceGroups/{Your-Resource-Group}/providers/Microsoft.Storage/storageAccounts/{Your-StorageAccount-Name}/blobServices/default/containers/{Your-Container-Name}"

Note!! Replace items in curly brackets with your own values (Do not keep the curly brackets).

$msi_objectid = "{Your Azure Function App's MSI objectid}"

$storageaccountscope = "{Scope of your storage account}"

$keyvaultname = "{Your Key Vault Name}"

Step Six: Set O365 Management API Permissions

Your Service principal OR MSI will need read access to the Activity Feed in the Office 365 Management API. To grant the appropriate permissions use the Powershell script block below:

#Get O365 Management API Service

$o365 = Get-AzureADServicePrincipal -Filter "appId eq 'c5393580-f805-4401-95e8-94b7a6ef2fc2'"

#grant access to Activity Feed Read o365 api role

if($Environment -eq "Development")

{

$o365_User_Object_Id = $localdev_serviceprincipal_objectid

}

else

{

$o365_User_Object_Id = $msi_objectid

}

ForEach ($i In $o365.AppRoles) {

if ($i.Value -eq "ActivityFeed.Read")

{

New-AzureADServiceAppRoleAssignment -Id $i.Id -PrincipalId $o365_User_Object_Id -ResourceId $o365.ObjectId -ObjectId $o365_User_Object_Id

}

}

#Get Assignments

$assignments = Get-AzureADServiceAppRoleAssignedTo -ObjectId $pbi_User_Object_Id

$assignments

#Remove Assignments

ForEach ($i In $assignments) {

Remove-AzureADServiceAppRoleAssignment -AppRoleAssignmentId $i.ObjectId -ObjectId $pbi_User_Object_Id

}

Your Service principal OR MSI will need read access to the Activity Feed in the Office 365 Management API. To grant the appropriate permissions use the Powershell script block below:

#Get O365 Management API Service

$o365 = Get-AzureADServicePrincipal -Filter "appId eq 'c5393580-f805-4401-95e8-94b7a6ef2fc2'"

#grant access to Activity Feed Read o365 api role

if($Environment -eq "Development")

{

$o365_User_Object_Id = $localdev_serviceprincipal_objectid

}

else

{

$o365_User_Object_Id = $msi_objectid

}

ForEach ($i In $o365.AppRoles) {

if ($i.Value -eq "ActivityFeed.Read")

{

New-AzureADServiceAppRoleAssignment -Id $i.Id -PrincipalId $o365_User_Object_Id -ResourceId $o365.ObjectId -ObjectId $o365_User_Object_Id

}

}

Note, if you wish to review permissions use the following code block:

#Get Assignments

$assignments = Get-AzureADServiceAppRoleAssignedTo -ObjectId $pbi_User_Object_Id

$assignments

If you wish to REMOVE ALL assignments use this code block. Note you don't need to run this!!!. It is just there in case you wish to remove assignments!!!.*

#Remove Assignments

ForEach ($i In $assignments) {

Remove-AzureADServiceAppRoleAssignment -AppRoleAssignmentId $i.ObjectId -ObjectId $pbi_User_Object_Id

}

Step Seven: Set Key Vault Access Rights

#Grant Get right on Key Vault secrets to the local dev service principal or msi

if($Environment -eq "Development")

{

$keyvault_User_Object_Id = $localdev_serviceprincipal_objectid

}

else

{

$keyvault_User_Object_Id = $msi_objectid

}

Set-AzureRmKeyVaultAccessPolicy -VaultName $keyvaultname -ObjectId $keyvault_User_Object_Id -PermissionsToSecrets Get

#Grant Get right on Key Vault secrets to the local dev service principal or msi

if($Environment -eq "Development")

{

$keyvault_User_Object_Id = $localdev_serviceprincipal_objectid

}

else

{

$keyvault_User_Object_Id = $msi_objectid

}

Set-AzureRmKeyVaultAccessPolicy -VaultName $keyvaultname -ObjectId $keyvault_User_Object_Id -PermissionsToSecrets Get

Step Eight: Set Storage Account Rights

The MSI and Service Principal will also need the ability to write data into the relevant blob storage account. I used the blob data contributor role but you can custom craft the permissions if you desire additional granularity. Run the Powershell Script block below to grant these rights to your Service Account or MSI.

#Grant Contributor Rights to Storage Account

$StorageAccountAccessList = New-Object Collections.Generic.List[string]

if($Environment -eq "Development")

{

$StorageAccountAccessList.Add($localdev_serviceprincipal_objectid)

}

else

{

$StorageAccountAccessList.Add($msi_objectid)

}

$role = Get-AzureRmRoleDefinition | Where-Object {$_.name -eq 'Storage Blob Data Contributor'}

forEach ($i in $StorageAccountAccessList)

{

# Add user to role

New-AzureRmRoleAssignment -RoleDefinitionId $role.Id -Scope $storageaccountscope -ObjectId $i

}

The MSI and Service Principal will also need the ability to write data into the relevant blob storage account. I used the blob data contributor role but you can custom craft the permissions if you desire additional granularity. Run the Powershell Script block below to grant these rights to your Service Account or MSI.

#Grant Contributor Rights to Storage Account

$StorageAccountAccessList = New-Object Collections.Generic.List[string]

if($Environment -eq "Development")

{

$StorageAccountAccessList.Add($localdev_serviceprincipal_objectid)

}

else

{

$StorageAccountAccessList.Add($msi_objectid)

}

$role = Get-AzureRmRoleDefinition | Where-Object {$_.name -eq 'Storage Blob Data Contributor'}

forEach ($i in $StorageAccountAccessList)

{

# Add user to role

New-AzureRmRoleAssignment -RoleDefinitionId $role.Id -Scope $storageaccountscope -ObjectId $i

}

Step Nine: Set Power BI API Rights

I spent a considerable amount of time trying to get an MSI to work with the Power BI API's but it does not seem to be supported yet. I suspect that it could be made to working by using a user assigned identity rather than a system assigned one but I have not yet tested this theory. As a result the current solution uses a Service Principal to access the Power BI API's. The details of this service principal are stored in the Key Vault.

The service principal needs to be given a high level admin role to allow it to read information from the Power BI API. Grant this role using the Powershell script below.

#Get Power BI Service Reference

$pbi = Get-AzureADServicePrincipal -Filter "appId eq '00000009-0000-0000-c000-000000000000'"

#grant access to Power BI ***Note always the cloud SP****

if($Environment -eq "Development")

{

$pbi_User_Object_Id = $cloud_serviceprincipal_objectid

}

else

{

$pbi_User_Object_Id = $cloud_serviceprincipal_objectid

}

ForEach ($i In $pbi.AppRoles) {

New-AzureADServiceAppRoleAssignment -Id $i.Id -PrincipalId $pbi_User_Object_Id -ResourceId $pbi.ObjectId -ObjectId $pbi_User_Object_Id

}

{

"clientId": "{object id of your cloud_serviceprincipal}",

"consentType": "Principal",

"expiryTime": "2019-11-11T08:31:03.2504886Z",

"id": "MY5uVG9wYka7S9dRzdAgFEkow96MyU9EkXjrxHeDNQy2LZYlsUzHTZHLnTXQv3-l",

"principalId": "{object_id of your power bi master user}",

"resourceId": "{Object Id of your Power BI Service}",

"scope": "App.Read.All Capacity.Read.All Capacity.ReadWrite.All Content.Create Dashboard.Read.All Dashboard.ReadWrite.All Dataflow.Read.All Dataflow.ReadWrite.All Dataset.Read.All Dataset.ReadWrite.All Gateway.Read.All Gateway.ReadWrite.All Report.Read.All Report.ReadWrite.All StorageAccount.Read.All StorageAccount.ReadWrite.All Tenant.Read.All Tenant.ReadWrite.All Workspace.Read.All Workspace.ReadWrite.All",

"startTime": "0001-01-01T00:00:00Z"

}

I spent a considerable amount of time trying to get an MSI to work with the Power BI API's but it does not seem to be supported yet. I suspect that it could be made to working by using a user assigned identity rather than a system assigned one but I have not yet tested this theory. As a result the current solution uses a Service Principal to access the Power BI API's. The details of this service principal are stored in the Key Vault.

The service principal needs to be given a high level admin role to allow it to read information from the Power BI API. Grant this role using the Powershell script below.

#Get Power BI Service Reference

$pbi = Get-AzureADServicePrincipal -Filter "appId eq '00000009-0000-0000-c000-000000000000'"

#grant access to Power BI ***Note always the cloud SP****

if($Environment -eq "Development")

{

$pbi_User_Object_Id = $cloud_serviceprincipal_objectid

}

else

{

$pbi_User_Object_Id = $cloud_serviceprincipal_objectid

}

ForEach ($i In $pbi.AppRoles) {

New-AzureADServiceAppRoleAssignment -Id $i.Id -PrincipalId $pbi_User_Object_Id -ResourceId $pbi.ObjectId -ObjectId $pbi_User_Object_Id

}

Next, the service principal needs to be specifically granted the rights to impersonate the Power BI master user account and read from Power BI as though it was the master user. The fastest way to do this is to navigate to the following link: https://developer.microsoft.com/en-us/graph/graph-explorer#. Login using an admin user. Then select the "POST" method and insert "https://graph.microsoft.com/beta/oauth2PermissionGrants/" into the URL box (this is the URL box to the right of the method selector). Paste the following JSON into the "Request body" section. NOTE!!! You need to replace values below that are in curly brackets with your own values ("clientid", "principalid" & "resourceId"). Also update "expiryTime" as required. The client ID is the objectid of your Service Principal's application (find this in the "Enterprise Applications" section of the Azure Portal). The principalId is the objectid of you Power BI master user. The resourceId is the objectid of your Power BI Service (Retrieve it by running the powershell command Get-AzureADServicePrincipal -Filter "appId eq '00000009-0000-0000-c000-000000000000'")

Once you have done this press the "Run Query" button. You should get a success response.

{

"clientId": "{object id of your cloud_serviceprincipal}",

"consentType": "Principal",

"expiryTime": "2019-11-11T08:31:03.2504886Z",

"id": "MY5uVG9wYka7S9dRzdAgFEkow96MyU9EkXjrxHeDNQy2LZYlsUzHTZHLnTXQv3-l",

"principalId": "{object_id of your power bi master user}",

"resourceId": "{Object Id of your Power BI Service}",

"scope": "App.Read.All Capacity.Read.All Capacity.ReadWrite.All Content.Create Dashboard.Read.All Dashboard.ReadWrite.All Dataflow.Read.All Dataflow.ReadWrite.All Dataset.Read.All Dataset.ReadWrite.All Gateway.Read.All Gateway.ReadWrite.All Report.Read.All Report.ReadWrite.All StorageAccount.Read.All StorageAccount.ReadWrite.All Tenant.Read.All Tenant.ReadWrite.All Workspace.Read.All Workspace.ReadWrite.All",

"startTime": "0001-01-01T00:00:00Z"

}

The final step is to add your master user to your application. To do this find your service principal in the "Enterprise Applications" section of the Azure portal. Next select "Users & Groups" and select "Add user".

Step Ten: Create your local development environment & set your application settings

In this next section I give you a some quick tips relating to developing, debugging and deploying my code templates in VS Code. If you have not developed Azure functions in VS code previously then I strongly suggest that you read the content at this link before continuing: https://code.visualstudio.com/tutorials/functions-extension/getting-started.

In this next section I give you a some quick tips relating to developing, debugging and deploying my code templates in VS Code. If you have not developed Azure functions in VS code previously then I strongly suggest that you read the content at this link before continuing: https://code.visualstudio.com/tutorials/functions-extension/getting-started.

Development Environment

To begin development or deployment first clone the code repository to your local development machine and open the"FunctionApp" folder using Visual Studio Code. The code repository can be found at: https://github.com/jrampono/power-bi-usage-analytics

To begin development or deployment first clone the code repository to your local development machine and open the"FunctionApp" folder using Visual Studio Code. The code repository can be found at: https://github.com/jrampono/power-bi-usage-analytics

Local Settings

For local development you will need to create a "local.settings.json" file and paste in the following JSON. Note!! Replace items in curly brackets with your own values. The final file should NOT include curly brackets.

{

"IsEncrypted": false,

"Values": {

"TenantId": "{Azure TenantId}",

"ApplicationId": "{The LocalDev Service Principal Application ID (Required for Local Dev Only)}",

"AuthenticationKey": "{The LocalDev Service Principal Authentication Key (Local Dev Only)}",

"KeyVault": "{Name of the Key Vault used to store sensitive information}",

"Domain": "{The domain name of the Azure Active Directory that you are using}",

"UseMSI": false

}

}

For local development you will need to create a "local.settings.json" file and paste in the following JSON. Note!! Replace items in curly brackets with your own values. The final file should NOT include curly brackets.

{

"IsEncrypted": false,

"Values": {

"TenantId": "{Azure TenantId}",

"ApplicationId": "{The LocalDev Service Principal Application ID (Required for Local Dev Only)}",

"AuthenticationKey": "{The LocalDev Service Principal Authentication Key (Local Dev Only)}",

"KeyVault": "{Name of the Key Vault used to store sensitive information}",

"Domain": "{The domain name of the Azure Active Directory that you are using}",

"UseMSI": false

}

}

Deploying to the Cloud

When deploying to the cloud, by default the local settings file is not published. Therefore, you will need to manually set the appropriate settings in the cloud once you have published the function. See the animation below for a quick run through of this.

Note that for cloud deployment you only need to set the following Application Settings:

Note that for cloud deployment you only need to set the following Application Settings:

{

"TenantId": "{Azure TenantId}",

"KeyVault": "{Name of the Key Vault used to store sensitive information}",

"Domain": "{The domain name of the Azure Active Directory that you are using}",

"UseMSI: true

}

When deploying to the cloud, by default the local settings file is not published. Therefore, you will need to manually set the appropriate settings in the cloud once you have published the function. See the animation below for a quick run through of this.

Note that for cloud deployment you only need to set the following Application Settings:

{

"TenantId": "{Azure TenantId}",

"KeyVault": "{Name of the Key Vault used to store sensitive information}",

"Domain": "{The domain name of the Azure Active Directory that you are using}",

"UseMSI: true

}

Step Eleven: Testing

I test my functions using the application called Postman but you can test using any tool that will allow you to craft Rest API calls and inspect the associated responses. For each function I've provided sample code in the CURL form. Please remember to remove items in curly brackets and replace with the relevant information for your environment. Also note that I have provided an "x-function-key" in the call. You only need this for calls to your cloud deployed functions and not for calls to VS Code hosted functions (for more details see https://docs.microsoft.com/en-us/azure/azure-functions/functions-manually-run-non-http)

I test my functions using the application called Postman but you can test using any tool that will allow you to craft Rest API calls and inspect the associated responses. For each function I've provided sample code in the CURL form. Please remember to remove items in curly brackets and replace with the relevant information for your environment. Also note that I have provided an "x-function-key" in the call. You only need this for calls to your cloud deployed functions and not for calls to VS Code hosted functions (for more details see https://docs.microsoft.com/en-us/azure/azure-functions/functions-manually-run-non-http)

Function GetPowerBiUsageStats

This function has three valid "Actions" which are all specific to data extraction from the Power BI REST APIs. The actions are listed below along with their respective CURL templates.

Call GetPowerBIMetaData with Action of "GetWorkspacesAndDetails": The first action GetWorkspacesAndDetails executes the entire end to end process of extracting the API data and uploading to blob. For organizations with a large Power BI environment this end-to-end action could take a long time to complete and hence there is a risk that the function will hit the maximum run-time limit's imposed by the Azure service. In such cases you can use the second two action types in combination to "break-up" the action and overcome the associated runtime limits.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIMetaData \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetWorkspacesAndDetails",

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

Call GetPowerBIMetaData with Action of "GetWorkspaces": Returns the list of ALL Workspaces for the entire Power BI tenant.

curl -X POST \

http://{Your Function App Base URL}/api/GetPowerBIMetaData \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetWorkspaces",

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

For Each Workspace Call GetPowerBIMetaData with Action of "GetItemsInWorkspace": Intended to be called iteratively for each item returned by the "GetWorkspaces" action.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetItemsInWorkspace \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetItemsInWorkspace",

"WorkspaceId": {The id of the current workspace in your foreach loop}

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

This function has three valid "Actions" which are all specific to data extraction from the Power BI REST APIs. The actions are listed below along with their respective CURL templates.

Call GetPowerBIMetaData with Action of "GetWorkspacesAndDetails": The first action GetWorkspacesAndDetails executes the entire end to end process of extracting the API data and uploading to blob. For organizations with a large Power BI environment this end-to-end action could take a long time to complete and hence there is a risk that the function will hit the maximum run-time limit's imposed by the Azure service. In such cases you can use the second two action types in combination to "break-up" the action and overcome the associated runtime limits.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIMetaData \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetWorkspacesAndDetails",

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

Call GetPowerBIMetaData with Action of "GetWorkspaces": Returns the list of ALL Workspaces for the entire Power BI tenant.

curl -X POST \

http://{Your Function App Base URL}/api/GetPowerBIMetaData \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetWorkspaces",

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

For Each Workspace Call GetPowerBIMetaData with Action of "GetItemsInWorkspace": Intended to be called iteratively for each item returned by the "GetWorkspaces" action.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetItemsInWorkspace \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetItemsInWorkspace",

"WorkspaceId": {The id of the current workspace in your foreach loop}

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

Function GetPowerBIUsageStats

This function has four valid "Action" parameters all specific to data extraction from the O365 audit logs. The actions are listed below along with their respective CURL templates.

Call GetPowerBIUsageStats with Action of "CreateSubscription": This needs to be called only ONCE per identity. Calling it initiates a subscription in the O365 Management API for the identity that calls it.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"CreateSubscription"

}'

Call GetPowerBIUsageStats with Action of "GetLogsAndUploadToBlob": Executes the entire end to end process of extracting the API data and uploading to blob. For organizations with a large volume of Audit events this end-to-end action could take a long time to complete and hence there is a risk that the function will hit the maximum run-time limit's imposed by the Azure service. In such cases you can use the next two action types in combination to "break-up" the action and overcome the associated runtime limits

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetLogsAndUploadToBlob",

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

Call GetPowerBIUsageStats with Action of "GetLogContentURIs": Returns the list of ContentURIs available for the current user's subscription. This output of this Action is intended to be iterated over and the "UploadContentToBlob" Action executed for each iteration.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetLogContentURIs"

}'

For each ContentURI call GetPowerBIUsageStats with Action of "UploadContentToBlob": Fetches the content associated with the passed in ContentURI and uploads it to Blob storage as a JSON file.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"UploadContentToBlob",

"ContentId": {The id of the content. This will have been returned in the list object that you receive from the action GetLogContentURIs},

"ContentUri": {The Uri of the content. This will have been returned in the list object that you receive from the action GetLogContentURIs},

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

This function has four valid "Action" parameters all specific to data extraction from the O365 audit logs. The actions are listed below along with their respective CURL templates.

Call GetPowerBIUsageStats with Action of "CreateSubscription": This needs to be called only ONCE per identity. Calling it initiates a subscription in the O365 Management API for the identity that calls it.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"CreateSubscription"

}'

Call GetPowerBIUsageStats with Action of "GetLogsAndUploadToBlob": Executes the entire end to end process of extracting the API data and uploading to blob. For organizations with a large volume of Audit events this end-to-end action could take a long time to complete and hence there is a risk that the function will hit the maximum run-time limit's imposed by the Azure service. In such cases you can use the next two action types in combination to "break-up" the action and overcome the associated runtime limits

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetLogsAndUploadToBlob",

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

Call GetPowerBIUsageStats with Action of "GetLogContentURIs": Returns the list of ContentURIs available for the current user's subscription. This output of this Action is intended to be iterated over and the "UploadContentToBlob" Action executed for each iteration.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"GetLogContentURIs"

}'

For each ContentURI call GetPowerBIUsageStats with Action of "UploadContentToBlob": Fetches the content associated with the passed in ContentURI and uploads it to Blob storage as a JSON file.

curl -X POST \

http://{Your Function App Base URL}:7071/api/GetPowerBIUsageStats \

-H 'x-functions-key: {Your Azure Function Key}' \

-d '{

"Action":"UploadContentToBlob",

"ContentId": {The id of the content. This will have been returned in the list object that you receive from the action GetLogContentURIs},

"ContentUri": {The Uri of the content. This will have been returned in the list object that you receive from the action GetLogContentURIs},

"BlobStorageAccountName":"{Your Blob Storage Account Name}",

"BlobStorageContainerName":"{Your Blob Storage Container Name}",

"BlobStorageFolderPath":"{Your Blob Storage Folder Path}"

}'

Step Twelve: Clean Up

- Remove service principal (it is only required for development and local debugging)

- Remove service principal (it is only required for development and local debugging)

Next Steps

Enhanced Usage Analysis with Power BI - Part Two: In my next article I will show you how to orchestrate the calling of the functions and the loading of the Azure SQL Database using Azure Data Factory.

Enhanced Usage Analysis with Power BI - Part Two: In my next article I will show you how to orchestrate the calling of the functions and the loading of the Azure SQL Database using Azure Data Factory.

Comments

Powerbi Read Rest